I am just about at the end of my fourth class in Georgia Tech's OMS CS program: Computational Photography. This was my first summer semester. I took a two-week vacation in the middle of it, and my house had some storm damage during the semester as well, so I wasn't able to give the course as much concentration as it probably deserved. I don't think I'll take a summer course again; I can't devote as much time to the course as I would like.

For an independent project in the class, I implemented a depth-mapping algorithm to extract depth from a stereo pair of images. I actually ended up with three implementations: one written from scratch, one from an OpenCV book, and one from the OpenCV library itself. I spent a good deal of time comparing and tuning them to build up an intuition for what the parameter values should be for various scenes.

As an example, this is one of the input images in a stereo pair:

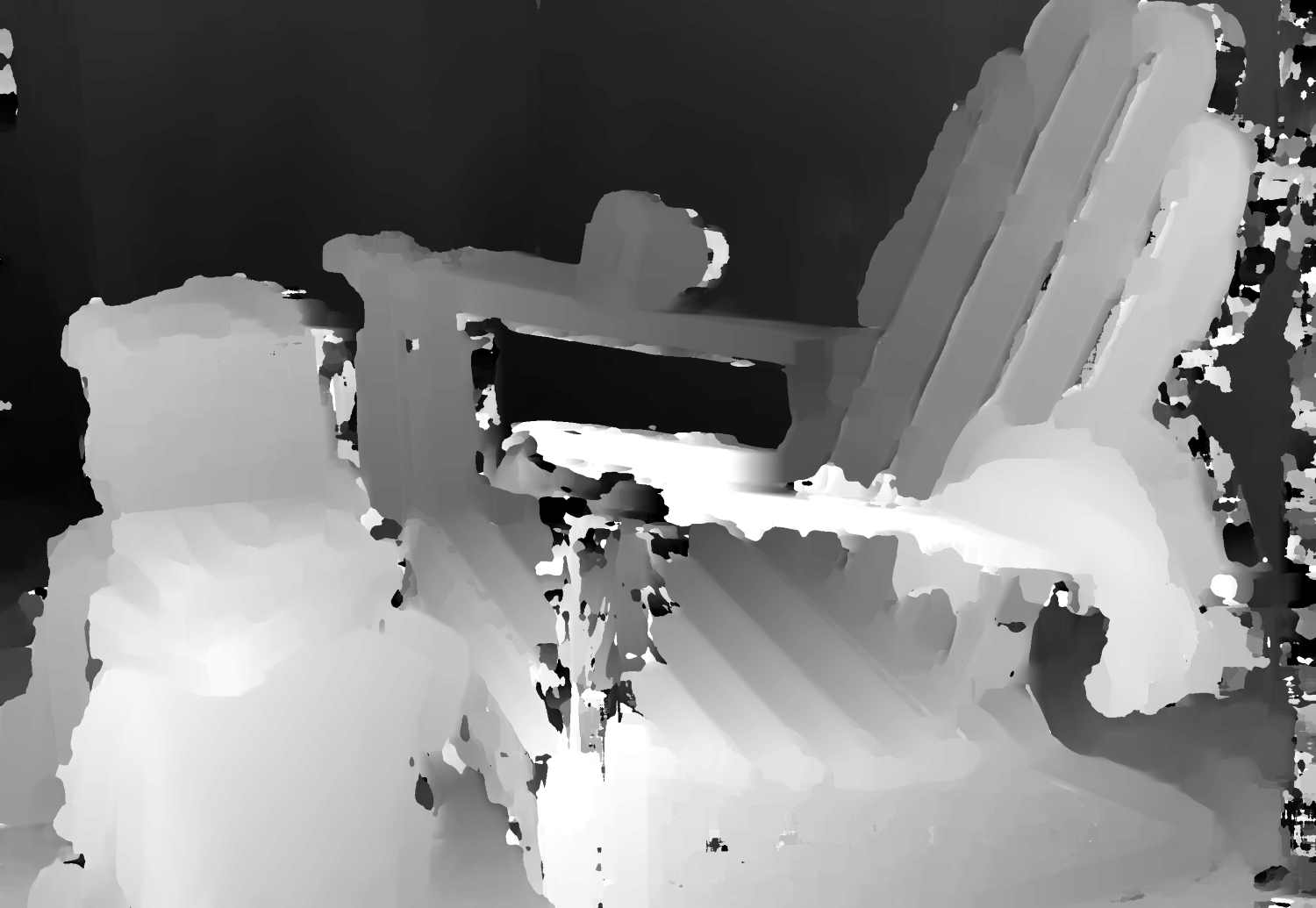

And here is the depth map (technically disparity, not depth) I computed for it, using a normalized cross-correlation-based method:

I created some videos of the output as I cycled through each algorithm and parameter value. They are kind of fun to watch, and more than a little bit trippy.
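For anyone curious how a normalized cross-correlation (NCC) disparity search works, here is a minimal NumPy sketch. It is not the code from my project or from Solem's book. It is an illustrative "plane sweep" version written under a few assumptions: the pair is rectified and grayscale, the left image is the reference, and a point at column x in the left image appears at column x - d in the right image. For each candidate disparity d, it shifts the right image, scores every pixel with a windowed NCC, and keeps the best-scoring d (winner-take-all). To keep the work down to a few box filters per disparity, it subtracts each pixel's local mean rather than the mean of the whole comparison window, a common shortcut. The names `box_mean`, `ncc_disparity`, `max_disp`, and `wid` are hypothetical, chosen for illustration.

```python
import numpy as np


def box_mean(img: np.ndarray, wid: int) -> np.ndarray:
    """Mean over a wid x wid window centred on each pixel (edges replicated)."""
    if wid % 2 == 0:
        raise ValueError("wid must be odd")
    r = wid // 2
    padded = np.pad(img, r, mode="edge")
    # Integral image with a leading row and column of zeros.
    ii = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    ii[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)
    h, w = img.shape
    window_sum = (ii[wid:wid + h, wid:wid + w] - ii[:h, wid:wid + w]
                  - ii[wid:wid + h, :w] + ii[:h, :w])
    return window_sum / (wid * wid)


def ncc_disparity(left: np.ndarray, right: np.ndarray,
                  max_disp: int, wid: int = 9) -> np.ndarray:
    """Winner-take-all disparity for a rectified grayscale pair (left image as reference)."""
    left = left.astype(np.float64)
    right = right.astype(np.float64)
    # Subtracting a local mean removes additive brightness offsets between the cameras;
    # dividing by the local standard deviations below removes gain differences.
    norm_l = left - box_mean(left, wid)
    norm_r = right - box_mean(right, wid)
    var_l = box_mean(norm_l ** 2, wid)

    best_score = np.full(left.shape, -np.inf)
    disparity = np.zeros(left.shape, dtype=np.int64)
    for d in range(max_disp + 1):
        # shifted_r[:, x] == norm_r[:, x - d], so left column x meets right column x - d.
        # np.roll wraps around, so scores near the left border are meaningless.
        shifted_r = np.roll(norm_r, d, axis=1)
        num = box_mean(norm_l * shifted_r, wid)
        var_r = box_mean(shifted_r ** 2, wid)
        # A small epsilon avoids division by zero in flat, textureless regions.
        score = num / (np.sqrt(np.maximum(var_l * var_r, 0.0)) + 1e-9)
        better = score > best_score
        best_score[better] = score[better]
        disparity[better] = d
    return disparity
```

Calling `ncc_disparity(left_gray, right_gray, max_disp=64, wid=9)` would return an integer disparity per pixel. The window size is the main knob: larger windows give smoother but blurrier maps, while smaller windows keep edges sharper but get noisy in low-texture areas. Turning disparity into actual depth for a rectified pair uses Z = f·B/d, with the focal length f in pixels and the camera baseline B.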

There are many more details and images in the full presentation PDF, which I'm not sharing here for academic integrity reasons. (Sorry!)

Credit for the stereo dataset shown goes to: D. Scharstein, H. Hirschmüller, Y. Kitajima, G. Krathwohl, N. Nesic, X. Wang, and P. Westling. "High-resolution stereo datasets with subpixel-accurate ground truth." In *German Conference on Pattern Recognition (GCPR 2014)*, Münster, Germany, September 2014. Available at http://vision.middlebury.edu/stereo/data/scenes2014/

Credit for the normalized cross-correlation algorithm goes to: Jan Erik Solem. *Programming Computer Vision with Python*. Sebastopol, CA: O'Reilly, 2012.